HDFS: The Hadoop Distributed File System

HDFS is the underlying distributed filesystem that your applications will use, allowing them to take advantage of parallel data processing.

HDFS is optimized for large sequential reads, and it performs best with large files (over 1 GB).

Files are split into blocks (128 MB each by default), and each block is replicated across multiple nodes, so the data remains available if a node fails.
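
To make the block and replica arithmetic concrete, here is a small worked example using shell arithmetic. It is a sketch that assumes the defaults described in this section (128 MB blocks, 3 replicas per block); your cluster may be configured differently, and the helper names `blocks_for` and `raw_mb_for` are hypothetical.

```shell
#!/usr/bin/env bash
# Worked example (sizes in MB): how many blocks a file occupies and how much
# raw cluster storage its replicas consume.
BLOCK_MB=128   # default HDFS block size (assumed)
REPLICAS=3     # default replication factor (assumed)

# Hypothetical helper: number of blocks = ceil(file size / block size),
# computed with integer arithmetic.
blocks_for() {
  local size_mb="$1"
  echo $(( (size_mb + BLOCK_MB - 1) / BLOCK_MB ))
}

# Hypothetical helper: raw storage = file size x replicas. The last block is
# not padded to the full block size, so it only uses the space its data needs.
raw_mb_for() {
  local size_mb="$1"
  echo $(( size_mb * REPLICAS ))
}

size_mb=1000   # example: a 1000 MB file
blocks=$(blocks_for "$size_mb")
echo "blocks: $blocks, block replicas: $(( blocks * REPLICAS )), raw storage: $(raw_mb_for "$size_mb") MB"
# prints: blocks: 8, block replicas: 24, raw storage: 3000 MB
```

Because the blocks of a file live on different nodes, they can typically be processed by separate tasks in parallel, which is one reason large files make good use of the cluster.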

By default each block has 3 replicas, but you can choose a different number of replicas when you create a file.
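
As a sketch of how to control replication, the helpers below wrap standard `hdfs dfs` options: the generic option `-D dfs.replication=N` when uploading, `-setrep` to change the replication of a file that is already in HDFS, and `-stat %r` to print a file's current replication factor. The function names and the `HDFS` variable are hypothetical conveniences, not part of Hadoop; setting `HDFS=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Command used to reach HDFS. Set HDFS=echo for a dry run that only
# prints the commands.
HDFS="${HDFS:-hdfs}"

# Hypothetical helper: upload a local file with a chosen number of replicas.
# -D dfs.replication=N overrides the default replication for this upload only.
put_with_replicas() {
  local src="$1" dst="$2" replicas="$3"
  "$HDFS" dfs -D dfs.replication="$replicas" -put "$src" "$dst"
}

# Hypothetical helper: change the replication of a file already in HDFS.
# -w waits until the new replication level has been reached.
set_replicas() {
  local path="$1" replicas="$2"
  "$HDFS" dfs -setrep -w "$replicas" "$path"
}

# Hypothetical helper: print the current replication factor of a file.
show_replicas() {
  local path="$1"
  "$HDFS" dfs -stat %r "$path"
}
```

For example, `put_with_replicas file.txt file.txt 2` followed by `show_replicas file.txt` should report 2. Fewer replicas save space but tolerate fewer node failures.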

We recommend that you first upload your files to the BD HOME filesystem through the DTN server and then copy them from there to HDFS. See the How to upload data section for more information.

To copy a file from the local filesystem to HDFS, run:

hdfs dfs -put file.txt file.txt

To list files:

hdfs dfs -ls
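
`hdfs dfs -ls` also accepts options that help when exploring larger directories: `-h` shows human-readable sizes and `-R` recurses into subdirectories, while `hdfs dfs -du -s -h` prints a one-line summary of a directory's size (recent Hadoop versions also show the space consumed by all replicas). Relative paths, and `-ls` with no path, are resolved against your HDFS home directory, normally `/user/<username>`. A sketch, where `inspect_dir` and the `HDFS` dry-run variable are hypothetical:

```shell
#!/usr/bin/env bash
# Command used to reach HDFS. Set HDFS=echo for a dry run that only
# prints the commands.
HDFS="${HDFS:-hdfs}"

# Hypothetical helper: inspect an HDFS directory (defaults to your home).
inspect_dir() {
  local dir="${1:-.}"
  "$HDFS" dfs -ls -h "$dir"      # -h: sizes such as 1.2 G instead of raw bytes
  "$HDFS" dfs -ls -R "$dir"      # -R: list subdirectories recursively
  "$HDFS" dfs -du -s -h "$dir"   # -s: a single summary line for the directory
}
```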

To create a directory:

hdfs dfs -mkdir mydir

To get a file from HDFS to the local filesystem:

hdfs dfs -get file.txt
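
Putting these commands together, a typical round trip might look like the sketch below. `round_trip`, `merge_output` and the `HDFS` dry-run variable are hypothetical; the options are standard: `-mkdir -p` creates missing parent directories and does not fail if the directory already exists, `-put` refuses to overwrite an existing file unless you add `-f`, and `-getmerge` concatenates every file in an HDFS directory (for example the `part-*` files a job writes) into a single local file.

```shell
#!/usr/bin/env bash
# Command used to reach HDFS. Set HDFS=echo for a dry run that only
# prints the commands.
HDFS="${HDFS:-hdfs}"

# Hypothetical helper: create an HDFS directory, upload a local file into it
# and copy it back to the local filesystem under a new name.
round_trip() {
  local file="$1" dir="$2" local_copy="$3"
  local name
  name="$(basename "$file")"
  # && stops the sequence at the first step that fails.
  "$HDFS" dfs -mkdir -p "$dir" &&
    "$HDFS" dfs -put "$file" "$dir/" &&
    "$HDFS" dfs -get "$dir/$name" "$local_copy"
}

# Hypothetical helper: merge all files of an HDFS directory into one local file.
merge_output() {
  local hdfs_dir="$1" local_file="$2"
  "$HDFS" dfs -getmerge "$hdfs_dir" "$local_file"
}
```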

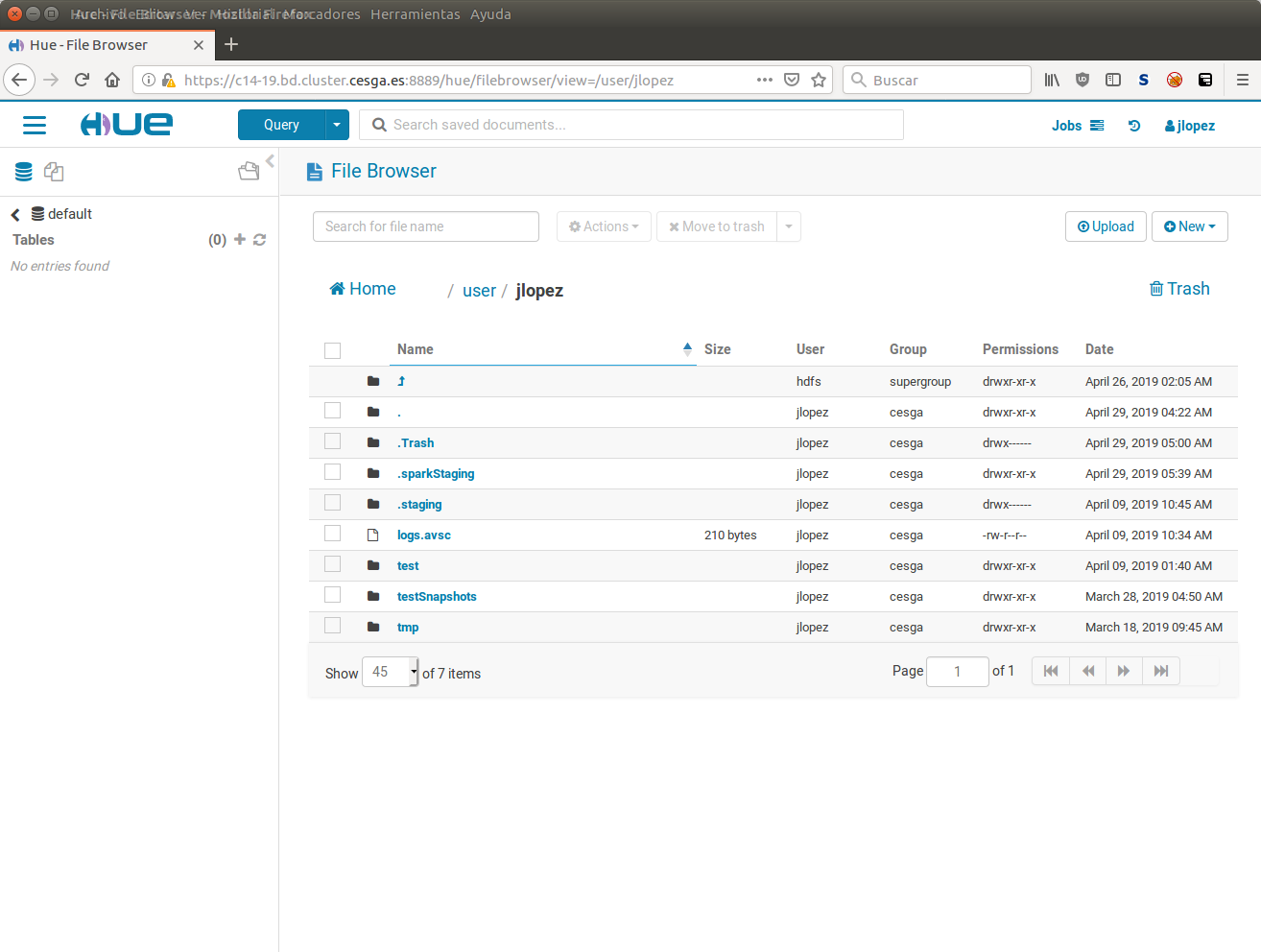

Using HUE, a graphical interface to Hadoop, you can explore HDFS from a web UI. You can access HUE through the BD|CESGA WebUI.

Exploring HDFS from HUE.

For more information on how to use HDFS, see the HDFS Tutorial we have prepared to get you started, and use the Hadoop Documentation as a reference.