Spark

Spark is probably the most popular tool in the Hadoop ecosystem nowadays. Besides the performance improvements it offers over standard MapReduce jobs, it considerably simplifies application development because it offers high-level programming APIs such as the DataFrames API.

The default version included in the platform is Spark 2.4.0, and you can easily start an interactive shell:

spark-shell

Similarly, to start an interactive session using Python:

pyspark

You can also use newer versions of Spark provided through Modules: Additional Software, for example:

module load spark/3.4.2

Note

If you use Python, we recommend using an Anaconda version provided through Modules: Additional Software.

To do so, load the desired version of the Anaconda module, for example:

module load anaconda3/2020.02

With Anaconda you can also use IPython for the interactive pyspark session, which gives you a nicer CLI:

PYSPARK_DRIVER_PYTHON=ipython pyspark

To submit a job:

spark-submit --name testWC test.py input output

Jobs are submitted to YARN and queued for execution. Depending on the load of the platform, execution may take more or less time.
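The contents of test.py are not shown here, so the following is only a minimal sketch of what a word count script taking input and output arguments could look like. The helper name split_words and the tokenization rule are assumptions made for illustration. The Spark calls used are textFile, flatMap, map, reduceByKey and saveAsTextFile from the RDD API.

```python
"""Hypothetical test.py: a word count sketch to run with spark-submit."""
import re
import sys


def split_words(line):
    """Lowercase a line and return its words (letters, digits, apostrophes)."""
    # Assumed tokenization rule; adapt it to your own data.
    return re.findall(r"[a-z0-9']+", line.lower())


def main(input_path, output_path):
    # pyspark is imported here so split_words can be tried without Spark.
    from pyspark.sql import SparkSession

    # No appName is set here, so the name given with --name is used.
    spark = SparkSession.builder.getOrCreate()

    lines = spark.sparkContext.textFile(input_path)
    counts = (
        lines.flatMap(split_words)              # one record per word
        .map(lambda word: (word, 1))            # pair each word with a count of 1
        .reduceByKey(lambda a, b: a + b)        # sum the counts per word
    )

    # saveAsTextFile fails if output_path already exists.
    counts.saveAsTextFile(output_path)
    spark.stop()


if __name__ == "__main__":
    if len(sys.argv) == 3:
        main(sys.argv[1], sys.argv[2])
    else:
        print("usage: spark-submit test.py <input> <output>", file=sys.stderr)
```

With a script like this, input would be a file or directory in HDFS and output a directory that does not exist yet.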

Note

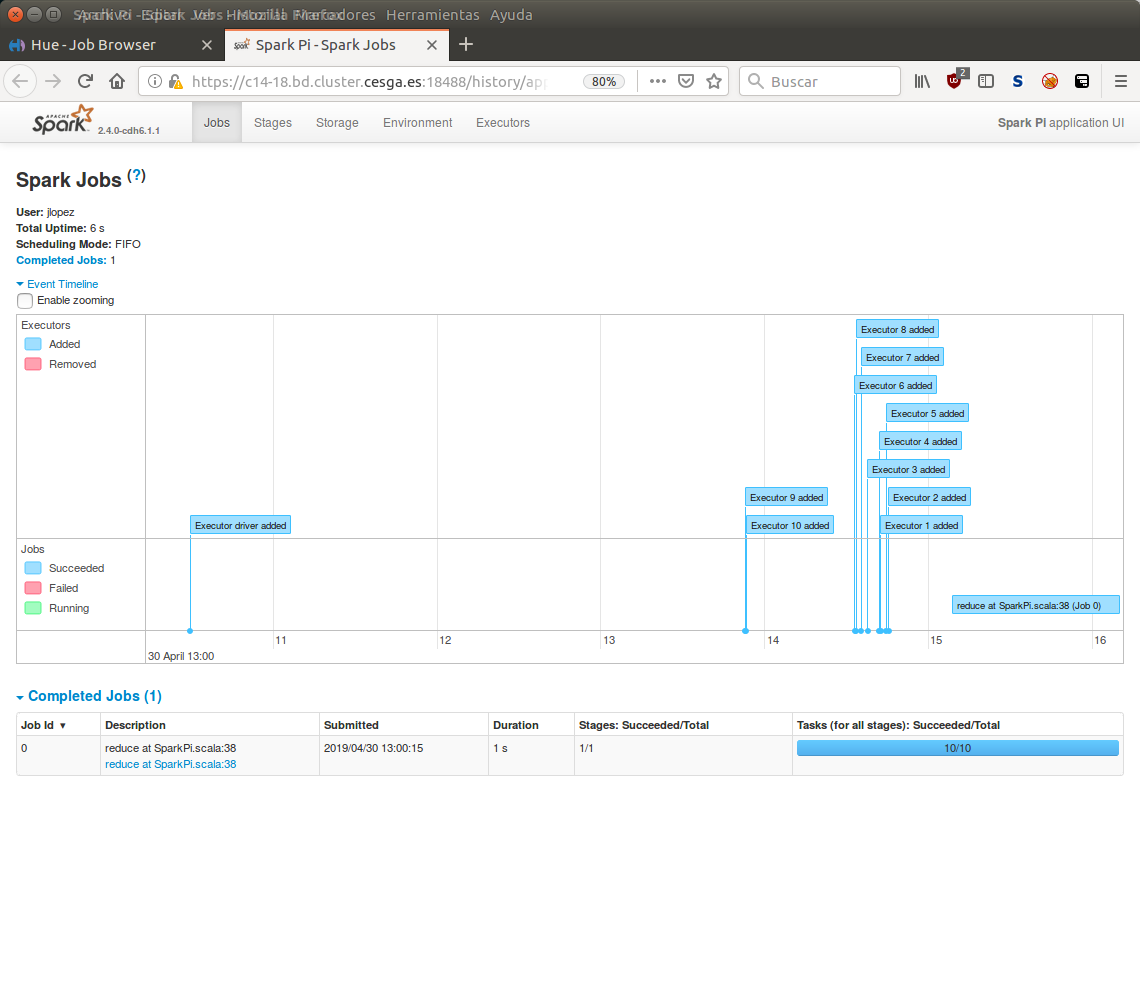

You can acess the Spark UI through the BD|CESGA WebUI and HUE: A nice graphical interface to Hadoop.

The Spark UI showing details of a given job.

For further information on how to use Spark, see the Spark Tutorial that we have prepared to get you started. For more detail, see the PySpark Course Material and the Sparklyr Course Material; these are courses that you can also attend. Finally, you may also find the Spark Guide in the CDH documentation useful, as well as the documentation provided by the Spark project.